The Higgs boson is a hypothetical elementary particle, a boson, that is the quantum of the Higgs field. The field and the particle provide a testable hypothesis for the origin of mass in elementary particles. In popular culture, the Higgs boson is also called the God particle, after the title of Nobel laureate physicist Leon Lederman’s book The God Particle: If the Universe Is the Answer, What Is the Question? (1993), in which the author asserts that the discovery of the particle is crucial to a final understanding of the structure of matter.

The existence of the Higgs boson was predicted in 1964 to explain the Higgs mechanism, the mechanism by which elementary particles are given mass. While the Higgs mechanism is considered confirmed to exist, the boson itself, a cornerstone of the leading theory, had not been observed before 2012 and its existence was unconfirmed. Its tentative discovery in 2012 may validate the Standard Model as essentially correct, as it was the final elementary particle predicted and required by the Standard Model that had not yet been observed in particle physics experiments. Alternative sources of the Higgs mechanism that do not need the Higgs boson are also possible and would be considered if the existence of the Higgs boson were ruled out. They are known as Higgsless models.

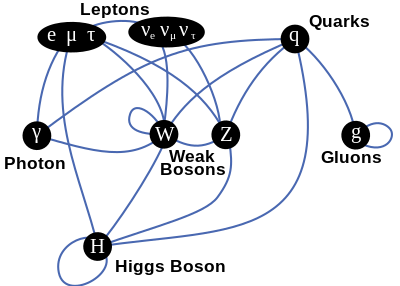

The Higgs boson is named after Peter Higgs, one of six authors who, in the 1960s, wrote the ground-breaking papers covering what is now known as the Higgs mechanism and described the related Higgs field and boson. Technically, the boson is the quantum excitation of the Higgs field, and the non-zero value of the ground state of this field gives mass to the other elementary particles, such as quarks and electrons, through the Higgs mechanism. The Standard Model completely fixes the properties of the Higgs boson, except for its mass. It is expected to have no spin and no electric or color charge, and it interacts with other particles through the weak interaction and through Yukawa-type interactions between the various fermions and the Higgs field.

Because the Higgs boson is a very massive particle and decays almost immediately when created, only a very high energy particle accelerator can produce it so that its decay can be observed and recorded. Experiments to confirm and determine the nature of the Higgs boson using the Large Hadron Collider (LHC) at CERN began in early 2010; similar experiments were performed at Fermilab's Tevatron until its closure in late 2011. Mathematical consistency of the Standard Model requires that any mechanism capable of generating the masses of elementary particles become visible at energies above 1.4 TeV; therefore, the LHC (designed to collide two 7 TeV proton beams, but currently running at 4 TeV each) was built to answer the question of whether or not the Higgs boson exists.

On 4 July 2012, the two main experiments at the LHC (ATLAS and CMS) independently reported the confirmed existence of a previously unknown particle with a mass of about 125 GeV/c² (about 133 proton masses, on the order of 10⁻²⁵ kg), which is "consistent with the Higgs boson" and widely believed to be the Higgs boson. They acknowledged that further work would be needed to confirm that it is indeed the Higgs boson (meaning that it has the theoretically predicted properties of the Higgs boson) and not some other previously unknown particle and, if so, to determine which version of the Standard Model it best supports.
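
The figures quoted above for the mass can be checked with a short calculation. The following Python sketch converts a mass given in GeV/c² to kilograms and to multiples of the proton mass. The function names and the rounded constants are illustrative choices made here, not part of any experiment's software.

```python
# Rounded physical constants (assumed values, adequate for an order-of-magnitude check).
GEV_IN_JOULES = 1.602176634e-10   # energy of 1 GeV in joules
SPEED_OF_LIGHT = 299_792_458.0    # metres per second
PROTON_MASS_GEV = 0.938272        # proton rest mass in GeV/c^2

def gev_to_kg(mass_gev):
    """Convert a mass in GeV/c^2 to kilograms using m = E / c^2."""
    return mass_gev * GEV_IN_JOULES / SPEED_OF_LIGHT ** 2

def gev_to_proton_masses(mass_gev):
    """Express a mass in GeV/c^2 as a multiple of the proton mass."""
    return mass_gev / PROTON_MASS_GEV

candidate_mass_gev = 125.0  # approximate mass reported in July 2012
print(f"{gev_to_kg(candidate_mass_gev):.2e} kg")
print(f"{gev_to_proton_masses(candidate_mass_gev):.0f} proton masses")
```

With these constants the result is roughly 2.2 × 10⁻²⁵ kg and roughly 133 proton masses, in line with the values stated in the text.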

Overview

In particle physics, elementary particles and forces give rise to the world around us. Physicists explain the behaviors of these particles and how they interact using the Standard Model, a widely accepted framework believed to explain most of the world we see around us. When the early models were being developed and tested, the mathematics behind them, although satisfactory in areas already tested, appeared to forbid elementary particles from having any mass, which showed clearly that these initial models were incomplete. In 1964 three groups of physicists almost simultaneously released papers describing how masses could be given to these particles, using approaches known as symmetry breaking. This approach allowed the particles to obtain a mass without breaking other parts of particle physics theory that were already believed reasonably correct. This idea became known as the Higgs mechanism (not the same as the boson), and later experiments confirmed that such a mechanism does happen, but they could not show exactly how it happens.

The leading and simplest theory for how this effect takes place in nature was that if a particular kind of "field" (known as a Higgs field) happened to permeate space, and if it could interact with fundamental particles in a particular way, then this would give rise to a Higgs mechanism in nature, and would therefore create around us the phenomenon we call "mass". During the 1960s and 1970s the Standard Model of physics was developed on this basis, and it included a prediction and requirement that, for these things to be true, there had to be an undiscovered boson, one of the fundamental particles, as the counterpart of this field. This would be the Higgs boson. If the Higgs boson were confirmed to exist, as the Standard Model suggested, then scientists could be satisfied that the Standard Model was fundamentally correct. If the Higgs boson were confirmed not to exist, then other theories would be considered as candidates instead.

The Standard Model also made clear that the Higgs boson would be very difficult to demonstrate. It exists for only a tiny fraction of a second before breaking up into other particles, so quickly that it cannot be directly detected. It can be detected only by identifying the results of its immediate decay and analyzing them to show that they were probably created by a Higgs boson and not by some other process. The Higgs boson requires so much energy to create (compared to many other fundamental particles) that a massive particle accelerator is needed to produce collisions energetic enough to create it and to record the traces of its decay. Given a suitable accelerator and appropriate detectors, scientists can record trillions of particles colliding, analyze the data for collisions likely to have produced a Higgs boson, and then perform further analysis to test how likely it is that the combined results show that a Higgs boson does exist, and that the results are not just due to chance.
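
The last step, testing whether an excess of candidate events could be due to chance, can be illustrated with a deliberately simplified counting experiment. The Python sketch below assumes a single measurement in which the expected number of background events is known exactly, computes the probability that background alone would produce at least the observed count, and expresses that probability as an equivalent number of standard deviations of a normal distribution. The function names and the event counts are invented for illustration; real analyses combine many decay channels and account for uncertainties that this sketch ignores.

```python
import math
from statistics import NormalDist

def poisson_tail(n_observed, expected_background):
    """Probability of seeing n_observed or more events from background alone,
    assuming the count follows a Poisson distribution."""
    if n_observed <= 0:
        return 1.0
    # Start from P(N = n_observed), computed in log space to avoid overflow.
    log_term = (-expected_background
                + n_observed * math.log(expected_background)
                - math.lgamma(n_observed + 1))
    term = math.exp(log_term)
    total = 0.0
    k = n_observed
    while True:
        total += term
        k += 1
        term *= expected_background / k  # P(N = k) from P(N = k - 1)
        # Stop once the terms are shrinking and no longer change the sum.
        if k > expected_background and term <= total * 1e-17:
            break
    return min(total, 1.0)

def significance(n_observed, expected_background):
    """Convert the chance probability into one-sided standard deviations."""
    p_value = poisson_tail(n_observed, expected_background)
    if p_value >= 1.0:
        return float("-inf")
    if p_value <= 0.0:
        return float("inf")
    return -NormalDist().inv_cdf(p_value)

# Hypothetical numbers: 100 background events expected, 150 events observed.
print(f"p-value: {poisson_tail(150, 100.0):.2e}")
print(f"significance: {significance(150, 100.0):.1f} standard deviations")
```

A larger excess over the same background gives a smaller chance probability and therefore a higher significance, which is the sense in which more data can make evidence for a new particle more convincing.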

Experiments to try to show whether the Higgs boson did or did not exist began in the 1980s, but until the 2000s it could only be said that certain mass ranges were plausible or ruled out. In 2008 the Large Hadron Collider (LHC), the most powerful particle accelerator ever built, was inaugurated. It was designed especially for this experiment and for other very high energy tests of the Standard Model. In 2010 it began its primary research role, which was to establish whether or not the Higgs boson existed.

In late 2011 two of the LHC's experiments independently began to suggest "hints" of a Higgs boson detection at a mass of around 125 GeV (the gigaelectronvolt is an energy unit commonly used to express particle masses). In July 2012 CERN announced[1] evidence of discovery of a boson with an energy level and other properties consistent with those expected of a Higgs boson. The available data indicated with high statistical likelihood that the Higgs boson had been detected. Further work is necessary for the evidence to be considered conclusive (or disproved). If the newly discovered particle is indeed the Higgs boson, attention will turn to considering whether its characteristics match one of the extant versions of the Standard Model. The CERN data include clues that additional bosons or similar-mass particles may have been discovered as well as, or instead of, the Higgs itself. If a different boson were confirmed, it would allow and require the development of new theories to supplant the current Standard Model.